Award and Prizes

Award

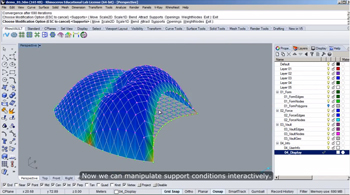

RhinoVAULT

Program specifications

ALGOrithmic Design type

Researcher

ETH Zurich, BLOCK Research Group, PhD Candidate

(registration : ETH Zurich, DARCH - ITA, PhD Candidate)

Program has been published

lecture symposium exhibition, website, peer reviewed paper

IJSS

group members

Lorenz Lachauer, Philippe Block

Review : Theodore Spyropoulos

lmn architecture

Program specifications

ALGOrithmic Design type

Office

Nikken Sekkei, Architectural, DDL

group members

Tsunoda Daisuke

Review : Michael Hansmeyer

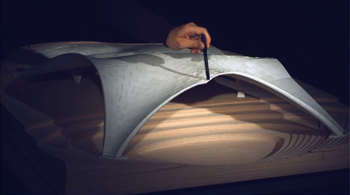

The LMN Architecture project proposes a decentralized, participative approach to the design of buildings using a web-based design tool. Designs can either be original, or they can be further developments of existing designs. Users can also evaluate designs by assigning points. The system’s logic works by accruing points from children’s evaluations to parent designs based on similarity calculations. It is thus possible to rank designs according to their desirability. It is also possible to see how designs originated in a tree diagram, and to see how they potentially relate to their parents, siblings, or children.
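The point-accrual logic described above can be illustrated with a minimal sketch. It is not the entry's actual implementation: the `Design` class, the rule that a child passes on its total multiplied by a similarity value, and the assumption that similarity lies between 0 and 1 are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Design:
    """One node in the design tree (all field names are hypothetical)."""
    name: str
    own_points: float = 0.0             # points users assigned to this design directly
    parent: Optional["Design"] = None
    similarity_to_parent: float = 0.0   # assumed to lie in [0, 1]
    children: list = field(default_factory=list)

    def add_child(self, child: "Design", similarity: float) -> "Design":
        child.parent = self
        child.similarity_to_parent = similarity
        self.children.append(child)
        return child


def total_points(design: Design) -> float:
    """Own points plus similarity-weighted points accrued from all descendants."""
    inherited = sum(c.similarity_to_parent * total_points(c) for c in design.children)
    return design.own_points + inherited


def ranking(root: Design) -> list:
    """All designs in the tree, sorted by accrued points, highest first."""
    stack, nodes = [root], []
    while stack:
        node = stack.pop()
        nodes.append(node)
        stack.extend(node.children)
    return sorted(nodes, key=total_points, reverse=True)


# Small made-up family tree of designs.
root = Design("cube", own_points=2)
tower = root.add_child(Design("tower", own_points=5), similarity=0.8)
tower.add_child(Design("twin towers", own_points=8), similarity=0.5)
root.add_child(Design("slab", own_points=1), similarity=0.2)

for d in ranking(root):
    print(d.name, round(total_points(d), 2))
```

Under this assumed rule a parent benefits most from successful children that stay close to it, which is one plausible reading of "based on similarity calculations".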

The entire system is very well designed; it is robust and easy-to-use. Though the design features are limited, the system is relatively open-ended and non-prescriptive. Within the realms of its modular logic, a variety of designs are feasible. The system would nonetheless benefit from a less formally limited solution space. Perhaps the tool could even be extended to allow the evaluation of existing buildings.

The logic of the inherited rankings is as effective as it is simple. On a broad level, there is even an incentive system for the designers as the author proposes to share any winnings from the ALGODeQ competition with the users that created the best design proposals.

The designs that achieve the highest points are surprisingly different from one another. The system appears to allow for multiple parallel champions rather than fostering a convergence. It would be interesting to see whether there would be a higher convergence if there were a need to adhere to a context or a more stringent briefing, e.g. site requirements or programmatic requirements.

Given that the system runs on a web site and should be scalable, it may be interesting to run multiple versions of it in parallel. Depending on their geographic location, users could be routed to a different version of the system. One could thus examine how different designs evolve based on the preferences of different regions.

It may also be interesting to complement the users’ evaluations with purely algorithmic ones. With relatively simple algorithms, it’s possible to evaluate features such as amount of sunlight, degree of privacy from street, inner connectivity of rooms, desirability of the view from the house, etc. Such an evaluatory algorithm could potentially intervene in the process, or it could be used to measure the users’ preferences, i.e. to see which features users appear to be optimizing for. Could the users compete with the algorithmic generation and evaluation? How could the two possibly interact?

LMN Architecture makes a convincing case for the potential of participatory design in architecture. It is a robust, innovative system that is only waiting to be expanded.

Kangaroo

Program specifications

ALGOrithmic Design type

Office

independent

Program has been published

book magazine

Architectural Design vol 83 pages 136-137

Review : Taro Narahara

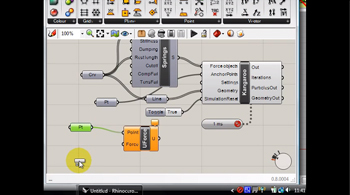

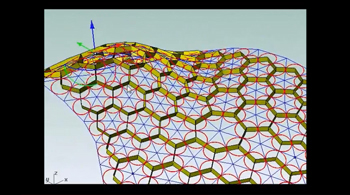

Who does not know the “Kangaroo” plug-in for Rhinoceros? At least you must have heard of its name if you are one of those computational architects who actively use digital software. Simply looking at its penetration rate, its significance in our community is undeniable. Of course, the competition’s evaluation criteria are not about the ownership rate of software. However, the creative outcomes produced by students and professionals using it, all across the world, have proved its significant contribution.

Two primary things that I looked for in all entries are versatility and originality. I must say that this entry probably achieves the highest levels of usability and versatility compared to the others. One might say that implementing a physics engine in an existing application or programming language is not exactly original or new. For example, major CAD applications such as Maya and 3ds Max have had rigid-body dynamics as a default feature for a while, and there are many existing physics engine libraries for various programming languages, such as Open Dynamics Engine (ODE) and Bullet for C++. In architectural software applications, there are not many examples released in the form of official software like this tool. However, I have seen many similar particle systems produced by colleagues in architecture using Processing, and this alone indicates strong interest in the area among architects. In fact, a particle system of masses connected by a network of springs, simulated with Newtonian mechanics using a finite-difference time-stepping method, seems a fairly common approach nowadays, and it is often a first coding step for anyone taking a computer graphics course in any CS department.
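For readers unfamiliar with the common approach mentioned above, the following is a minimal sketch of a particle-spring system advanced with finite time steps. It is a generic textbook scheme (semi-implicit Euler with simple velocity damping), not Kangaroo's solver; the function name `step` and all parameter values are assumptions chosen for illustration.

```python
import math


def step(positions, velocities, masses, fixed, springs, dt,
         gravity=(0.0, -9.81), damping=2.0):
    """Advance a 2D particle-spring network by one time step (semi-implicit Euler).

    springs is a list of (i, j, rest_length, stiffness) tuples.
    """
    # Start with gravity acting on every particle.
    forces = [[m * gravity[0], m * gravity[1]] for m in masses]

    # Add Hooke's-law spring forces along each spring's direction.
    for i, j, rest, k in springs:
        dx = positions[j][0] - positions[i][0]
        dy = positions[j][1] - positions[i][1]
        length = math.hypot(dx, dy)
        if length == 0.0:
            continue
        f = k * (length - rest)  # positive when stretched: pulls i and j together
        fx, fy = f * dx / length, f * dy / length
        forces[i][0] += fx
        forces[i][1] += fy
        forces[j][0] -= fx
        forces[j][1] -= fy

    # Newton's second law: update velocity first, then position with the new velocity.
    for n in range(len(positions)):
        if fixed[n]:
            continue
        for axis in (0, 1):
            forces[n][axis] -= damping * velocities[n][axis]
            velocities[n][axis] += dt * forces[n][axis] / masses[n]
            positions[n][axis] += dt * velocities[n][axis]


# One anchored particle with a 1 kg mass hanging below it on a spring.
positions = [[0.0, 0.0], [0.0, -1.0]]
velocities = [[0.0, 0.0], [0.0, 0.0]]
masses = [1.0, 1.0]
fixed = [True, False]
springs = [(0, 1, 1.0, 50.0)]

for _ in range(3000):
    step(positions, velocities, masses, fixed, springs, dt=0.01)

print(positions[1])  # settles where the spring force balances gravity
```

With damping, the hanging mass comes to rest where the spring extension equals m·g/k, which is the kind of equilibrium a form-finding tool looks for in a much larger network.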

However, all that said, I argue that the developer made an extremely smart decision in choosing one of the most popular and widely distributed environments among architects, Rhinoceros, and in building an intuitive and painless interface upon the Grasshopper plug-in; he thereby introduced a dynamic world of physics to non-programmers and even beginning software users. The work requires completely different effort and knowledge of a specific SDK platform compared to, for example, writing code in Processing. The tool was also developed by the contestant alone rather than by an army of programmers, and the performance of the software is fairly stable and robust. Additionally, I would like to mention that another entry, “In258 iGeo,” demonstrated a skill level in computing equal to that of “tu142 Kangaroo”. There are many overlaps between the two tools in terms of capabilities and features, and iGeo has shown its applications in larger-scale built structures from professional practices. Probably due to evaluation criteria such as “ease of use” in the guidelines and overall impact among architects at all levels, Kangaroo received more votes this time, but both tools demonstrated nearly equally impressive results.

Furthermore, Kangaroo comes with features that are not normally implemented inside a typical physics engine, such as the generation of circle-packing meshes, which obviously requires high-level understanding of mathematical principles and computational skills. All these new additional features are tailored for architectural design purposes; software with this level of high-end customization for architectural users is unprecedented. Since the software has already been around for a while and is known to the majority of the people reading this, the selection might not give you a huge surprise or a discovery. Yet, in conclusion, it would be a crime not to select an accomplishment of this magnitude, and I voted for this entry as one of the two best selections.

Prizes

iGeo

Program specifications

ALGOrithmic Design type

Office

ATLV, computational design, founder

Program has been published

website

public web launch

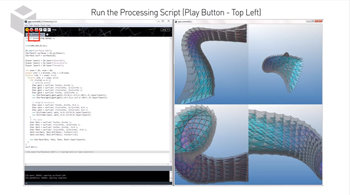

Review : Taichi Sunayama

Over the past fifteen years, technological advancements in 3D CAD software have shaped a new morphological expression in the contemporary discourses of Architecture / Design / Art. In particular, the VB/C#-based scripting language "RhinoScript" and the visual programming language "Grasshopper" are central to the development of this new design expression called "computational design". Both of these development environments are based on the 3D CAD software "Rhinoceros", which is widely used in design practice and in education nowadays.

On the other hand, the free Java-based scripting environment Processing has been promoted in the fields of visual design and interactive arts since 2001. Today, there are tens of thousands of students, artists, designers, researchers, and hobbyists who use Processing for learning, prototyping, and production.

Comparing these two major computational design tools, RhinoScript features linear (non-iterative) operation, whereas Processing is useful for iteration (e.g. L-systems, agent-based simulation).

This Processing library "igeo" seems to be based on the principles of RhinoScript coding. "igeo" brings the fundamental elements of computational design to Processing, including NURBS surfaces, mesh modeling, vector mathematics, surface-subdivided tessellation, recursive iterative geometry and more.

By transporting the concept of RhinoScript to Processing, "igeo" not only links the two scripting platforms, but also fuses two different computational design processes, namely the non-iterative and the iterative. Non-iterative processes are top-down design processes (e.g. surface-subdivided tessellation, which can efficiently and rationally generate structural facade designs in practical use). Iterative processes are bottom-up processes such as recursive iteration (e.g. simulation of growing structures, agent-based algorithms, and physical dynamics, methods much closer to natural phenomena).
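The distinction between the two kinds of process can be made concrete with a small sketch. It is written in plain Python and does not use or imitate the igeo API; the function names `tessellate` and `lsystem` are hypothetical. The first function computes every panel of a subdivided surface directly from its indices in a single pass, while the second must be run step by step because each generation depends on the previous one.

```python
def tessellate(nu, nv):
    """Non-iterative (top-down): split the unit (u, v) domain into nu * nv panels.

    Each panel is returned as a pair of opposite corners in parameter space and
    is computed directly from its indices, independent of every other panel.
    """
    return [((i / nu, j / nv), ((i + 1) / nu, (j + 1) / nv))
            for i in range(nu) for j in range(nv)]


def lsystem(axiom, rules, generations):
    """Iterative (bottom-up): rewrite a string repeatedly with an L-system.

    Generation n can only be produced from generation n - 1.
    """
    state = axiom
    for _ in range(generations):
        state = "".join(rules.get(symbol, symbol) for symbol in state)
    return state


panels = tessellate(3, 2)
print(len(panels), panels[0])

# Lindenmayer's classic "algae" system: A -> AB, B -> A
print(lsystem("A", {"A": "AB", "B": "A"}, 4))
```

In a facade application the parameter-space panels would be mapped onto a surface, and in a growth simulation the L-system symbols would be interpreted as geometric operations; both mappings are left out here.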

"igeo" deserves to be praised for paving the way for the tandem with "Rhino" and "Processing". But although it seems unavoidable for its design concept, there is less room for more extension with other libraries for Processing. It seems to be quite closed in its system. Therefore, judging by criteria of this competition "Deep thought and path-breaking methods", it seems that this Processing library is inadequate to hack the next horizon.

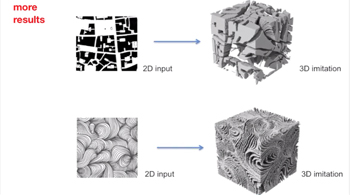

Imitation

Program specifications

ALGOrithmic Design type

Researcher

Southeast University, Architecture, lecturer

Review : Toru Hasegawa

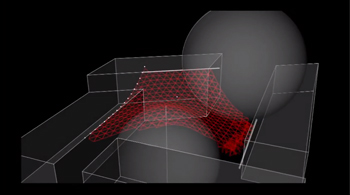

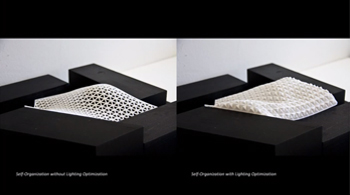

Agent Freeform Gridshell Generation

Program specifications

ALGOrithmic Design type

Researcher

University of Stuttgart, Architecture, Research Associate

Program has been published

lecture symposium exhibition

Acadia 2013 - Poster Presentation

group members

Marco Baur

Review : Taro Narahara

There are many entries that have attempted to apply self-organizational principles in nature to architectural design generation processes, and this work demonstrated one of the most distinctive and successful representatives of this effort. After the competition, I realized that the contestant is from a group at the University of Stuttgart that has been actively investigating self-organizational characteristics through experimental structures and materials. Some of their works, among those I know of, rely partly on physical material properties or structural systems performance to acquire self-organizing behaviors. However, the contestant added purely computational agents for the investigation of the same primary topic area of the group and has created an interesting hybrid that appears to be slightly different from the group’s known mainstream direction.

What I was impressed by the most in this project was its superb representation. The animation depicting the dynamic agent-based self-organizing process was exhilarating, and the rich slide presentation visually explains complex processes well. The final outcome can be seen as a result of original design research rather than as a software development. I speculate that some structural optimization methods internally use similar logics to some extent: heuristic methods such as a particle swarm optimization and a GA are often used for structural optimizations by engineers. I would like to leave the inquiry about the true originality of this algorithmic method to structural engineers. However, the contestant visualized normally inert and static building components as if they are living entities in a real-time dynamic manner, and it is a contribution to represent them in such a unique animated way.

I speculate that the true impact of the tool could be better represented through a more strategic selection of conditions that capture its full advantages, and such conditions may not be conventional ones. In the contestant’s slides, he argued that “the conventional top-down process” has some shortcomings. But the selected default site condition in the software and the resulting truss configurations still seem to be within the reach of existing tools’ capabilities. Aerospace engineers developing reconfigurable swarm robots for a mission to Mars makes sense, since the robots require greater flexibility and adaptability for tasks where limited cognitive capabilities are available. A similar rationale can be given for this project. Including unique conditions where this unusual tool’s capabilities become more meaningful and clearer seems a logical next step, and this step might yield even more provocative results than regular trusses spanning walls. Sometimes, users of software can help find such conditions spontaneously through well-prepared user interfaces. Players of the online protein-folding puzzle game “Foldit” have discovered several structures that had been unaccomplished goals for scientists for many years. Improvement of the user interface could help the advancement of the research as well.

Overall, I was fascinated by the contestant’s outstanding representations and problem-solving approach, and I considered that its original aspects surpassed the quality of several other well-balanced submissions based on either known or already well-introduced algorithmic methods.

Public Mall

Program specifications

ALGOrithmic Design type

Student

Keio University, Graduate School of Media and Governance

group members

Yusuke Fujihira

Review : Benjamin Dillenburger

The ALGODeQ competition is searching for creative work to enable solutions to problems, astonishing forms or contributions to society and human culture.

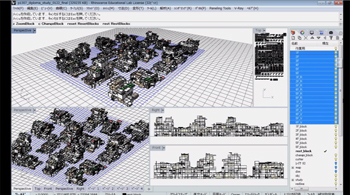

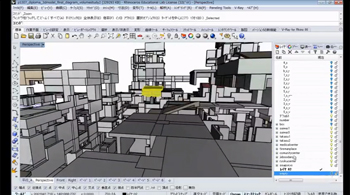

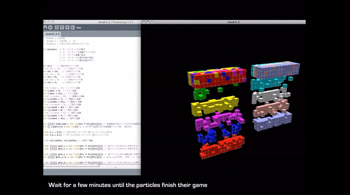

The entry “Public Mall” is described as a “design democratization system”. As the author is searching for new ways of participatory design, he doesn’t present one specific design solution but instead proposes a new design method. The background of the project is his observation that the maintenance of existing, decentralized public facilities in Japan will demand large investments in future. As an alternative to their renovation, the author proposes the dismantling and reconstruction of centralized public buildings through a new design and fabrication strategy. The existing buildings are cut into cubical, structural modules of five-meter side length. A catalog of thousands of those individual parts forms the basis for a combinatorial design system. Architects and citizens should design the public buildings in a discursive democratic process through selection and combination of these components.

The modular approach of the project resembles the design system introduced by J.N. Durand or Froebel’s building blocks. Following a grid-logic and rules of composition, predefined components allow the design of a multitude of new design solutions. The difference is that in the approach of the Public-Mall project, these modules are recycled “objets trouvés”, and as such irregular in their appearance.

In the presented approach the computer is more than a pure drawing tool. Algorithms are innovatively employed for the creation and administration of the database of cutouts, and as a search tool and an instrument for design collaboration and visualization.

The proposed system surprises with the impressive variety of spatial configurations and avoids the monotonous character of other grid-based systems. It is a creative architectural invention allowing a modern reinterpretation of the concept of “spolia”. In this sense, it successfully reaches the declared goal of the author to generate unexpected constellations and to allow the search for unimaginable shapes. The intriguing visualizations underline the idea of inhabitants occupying such heterogeneous spaces.

A strong aspect of the project is the combination of an algorithmic strategy with both formal and social aspects of a democratic design approach. The methodology for the recycling of the existing building structures is interesting from an economic and an ecological perspective.

This integrative aspect can be criticized as well. The project might be based on too many assumptions, regarding both the technical feasibility of such a large-scale puzzle system and the acceptance of a participatory design process. Each of these topics is challenging enough to be examined separately. Although the project is unique in its specific strategies, precedent studies of structuralism in architecture could contextualize the presented ideas.

While the code itself is sophisticated, the choice of Rhino for a real-time customized design tool can be questioned. From this point of view, it would be interesting to see such a speculative design scenario as a multi-user online application. This could emphasize the democratic aspects of this visionary approach.

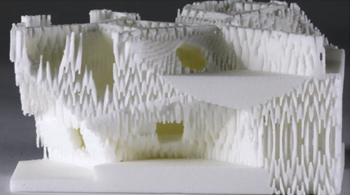

3D PRINTING HOUSE

Program specifications

ALGOrithmic Design type

Student

ETHz, Architecture

Review : Shohei Matsukawa

Street View

Program specifications

ALGOrithmic Design type

Office

hclab, urban planning, researcher

group members

sota ichikawa, takatoshi arai

Review : Urs Hirschberg

First of all it makes graph analysis methods available in an easy and playful way. Any street pattern can be loaded into the program as a 3dm (Rhino) file. The program checks whether it represents a proper graph network (all nodes must be connected by at least one possible path). Then it’s fun to analyze and explore the network, for example by highlighting the shortest paths between nodes or by coloring its nodes according to different centrality calculations. It’s fun because it can be done so quickly. It even works on a touch-screen. The program also has some nice features, such as time-distance maps in which geometry is distorted according to how long it takes to get from any point on the map to a chosen goal. It also explores new types of centrality by using an eigenvector calculation (Google’s PageRank algorithm) to analyze and color nodes. The charm lies in how easily different scenarios can be explored. What if there is an additional connection here? What if this street is closed? What if this bridge is destroyed? The program provides quick answers. Its analysis can be saved as images or on separate levels in the vector file. Street View is a useful and clever little program that does one thing and does it well, and it can be very useful in city planning.
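The kinds of analysis described above can be sketched in a few lines. This is not the Street View program's code: the dictionary-based graph, the four-node example network, and the function names are assumptions made for illustration, and the algorithms used (breadth-first search for the connectivity check, Dijkstra's algorithm for shortest paths, power iteration for PageRank) are standard textbook choices.

```python
import heapq
from collections import deque


def is_connected(graph):
    """True if every node can be reached from every other node (BFS)."""
    start = next(iter(graph))
    seen, queue = {start}, deque([start])
    while queue:
        node = queue.popleft()
        for neighbor in graph[node]:
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return len(seen) == len(graph)


def shortest_path(graph, source, target):
    """Dijkstra's algorithm; returns (path, length), or (None, inf) if unreachable."""
    dist, prev = {source: 0.0}, {}
    heap = [(0.0, source)]
    while heap:
        d, node = heapq.heappop(heap)
        if node == target:
            break
        if d > dist.get(node, float("inf")):
            continue  # stale heap entry
        for neighbor, weight in graph[node].items():
            nd = d + weight
            if nd < dist.get(neighbor, float("inf")):
                dist[neighbor], prev[neighbor] = nd, node
                heapq.heappush(heap, (nd, neighbor))
    if target not in dist:
        return None, float("inf")
    path = [target]
    while path[-1] != source:
        path.append(prev[path[-1]])
    return path[::-1], dist[target]


def pagerank(graph, damping=0.85, iterations=100):
    """PageRank by power iteration, ignoring street lengths."""
    n = len(graph)
    rank = {node: 1.0 / n for node in graph}
    for _ in range(iterations):
        new = {node: (1.0 - damping) / n for node in graph}
        for node, neighbors in graph.items():
            targets = neighbors if neighbors else graph  # isolated node: spread evenly
            share = damping * rank[node] / len(targets)
            for t in targets:
                new[t] += share
        rank = new
    return rank


def without_edge(graph, a, b):
    """Copy of the graph with the street between a and b closed."""
    copy = {node: dict(neighbors) for node, neighbors in graph.items()}
    copy[a].pop(b, None)
    copy[b].pop(a, None)
    return copy


# Hypothetical street network: node -> {neighbor: street length}
streets = {
    "A": {"B": 1, "C": 3},
    "B": {"A": 1, "C": 1},
    "C": {"A": 3, "B": 1, "D": 1},
    "D": {"C": 1},
}

print(is_connected(streets))
print(shortest_path(streets, "A", "D"))
print(shortest_path(without_edge(streets, "B", "C"), "A", "D"))  # "What if this street is closed?"
print(max(pagerank(streets), key=pagerank(streets).get))
```

Closing a street is just a matter of copying the graph without one edge and re-running the same queries, which is why such what-if scenarios can be answered quickly.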

c-eyes

Program specifications

ALGOrithmic Design type

QA category only

Office

doubleNegatives Architecture Ltd., design, director

Review : Benjamin Dillenburger

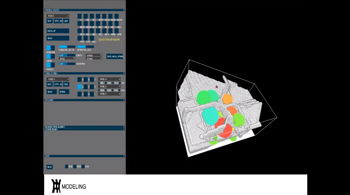

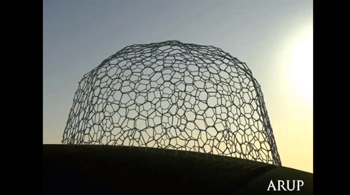

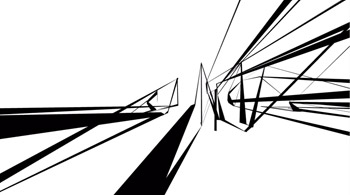

The ALGODeQ competition is searching for creative work to enable solutions to problems or astonishing forms. The entry “c-eyes” presents a form-finding software for architectural frame-structures. Multiple constraints and optimization criteria are integrated: the site boundary, fabrication constraints, wind loads, sun-position and building codes are mentioned by the authors. The approach of the authors combines a bottom-up with a top-down strategy. The software tries to find an optimized balance between the individual “desires” of each node of the frame-structure and design-criteria for the overall structure. The goal is to create an organic design-system comparable to the human-body “which has autonomous cell neighbor relation and top-down brain command.” The building should be designed from the description of relationships between the elements. The software is successfully applied in the design of a small house, which is described by photos and text.

Both components of the project - the developed software and the house - are presented in an appealing way. The graphical user interface of the software is well designed, and its minimalistic layout is convincing. This encourages the user to play with the many parameters and settings to explore the design space. The selection of C++ as software platform and the integration of existing geometrical libraries such as CGAL seem to be an appropriate choice for the application, as the performance of the test version was very good.

While other approaches often include only one or two individual design criteria, the c-eyes software can handle a multitude of relationships. In this regard, the project would benefit from contextualization and comparison with existing approaches such as Greg Lynn’s Embryological House and the Induction Design by Makoto Sei Watanabe.

In contrast to the otherwise extensive documentation of project and software, the methodology for optimization could be described in more detail. Specifically, it remains unclear how the individual criteria are weighted. A discussion of the design of the solution-space would be helpful. In addition, more information about the fabrication-process of the building would be very interesting.

The strongest argument for the proposed approach is the realized building itself: it is one of only a few algorithmic architectures that have actually been built. This project successfully overcomes the challenges of the transition from conceptual idea to real-world application. While the overall idea might not be without precedent, the precision and the consistency of the process are impressive. This building is not directly designed but rather grown like a tree, which fits well with its green surroundings.

Although the resulting architecture itself has a high architectural standard, it could still be designed in a traditional way. Nonetheless, the quality of the project makes one curious about further applications of the software. A larger building with even more challenging constraints and structural members would certainly demonstrate the power of algorithmic design even more strongly and could lead to more surprising results.

The project c-eyes is an early, successful instance of a new direction in the discipline of architecture. The architect no longer designs by drawing the building, but by designing processes - the algorithms - that in turn generate the building.

Shift Frame Solver

Program specifications

ALGOrithmic Design type

QA category only

Office

Hiroshi Sambuichi Architects, N/A, Principal Architect

Program has been published

lecture symposium exhibition

Algode2011

group members

Kazuma Goto, Ryota Kidokoro

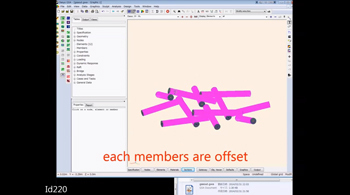

Review : Chen-Cheng Chen

Reciprocal structures have long been attempted in different cultures, and they are a structural theme that is followed closely by many builders. Project ld220, a reciprocal structure by computer simulation, was a very interesting attempt. Personally, I think that a reciprocal structure by computer simulation should also consider the problem of the curvature of curved surfaces. When the slope of the tangent exceeds a certain range, the structural rods are unable to snap together, and because of the force of gravity, bracing rods easily fall, so we handled the connections in other ways. Therefore a reciprocal structure, under greater curvature, becomes more like a net structure, which is closer to how this project was presented in the end.

In simulation calculations of reciprocal structures, the design of snap connections is extremely important. Moreover, for the site layout after the reciprocal structure calculation, the marks on each rod deserved special attention because they were difficult to calculate in 3D. Finally, the reciprocal structure was very difficult to set up. Setting up the arrangement of rods exceeded the limits of what a computer is normally able to calculate. It was more appropriate to see this project as a net structure. After the initial gridding, ld220 used shift frame to adjust the placement of the rods. Then, after the calculation of a large-scale grid, steel tubes were positioned and small wooden rods were placed and fixed into the steel net grid. It was very challenging to set the layout and construct this project, but placing the rods in space after calculation on the computer was very special. If this project had given more details about setting up the layout, and about how to design and put together the small parts of the rod connections, it probably would have been more interesting. Overall, however, this project was interesting, as it developed a final structure with appropriate calculations, and allowed us to understand a very complex form resulting from simple calculation principles. Bravo!

Shortlisted

QUE

Program specifications

ALGOrithmic Design type

Researcher

Shibaura Institute of Technology, Graduate School of Engineering and Science, postdoc

group members

Akio Ota, Hiroki Inagawa

Review : Theodore Spyropoulos

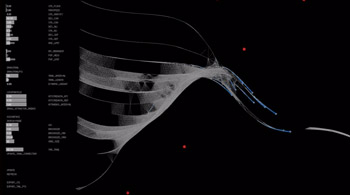

The submission poses a research question that focuses on urban issues within a city context through activities, not objects. The analogy is to correlate activities as a generator for what the author aims to propose as a means towards design. There is an attempt to mention Kenzo Tange and Kevin Lynch within a history of experiments that aims to see the city through this filter. Unfortunately this is not explored in detail, which would have been very useful in creating categories of interactions rather than codifications of characterizations of quantifications. The agent-based rule set is a method which lends itself to a high population of event-based understandings. The rules of information synthesis that would be generated would have to be targeted with certain behavioral features that would allow this complexity to build information rather than lose it. John Henry Holland is someone the author should investigate in order to qualify, beyond visual mapping, how this translates into meaningful design input. Research that has been applied within the city can be seen in case studies such as Singapore. At the moment this step, which is critical to the research, is tentative at best.

There is a seed of an exciting project, but there is some generalization in the author’s layering system and agent codification, which loses information and synthesis through the setup. There is a translation of the information in a relative manner that needs to be targeted towards a design vision that challenges the conventions of what is stated as “objects”. It would be of interest for the author to make distinctions between activities and events in the city. There is a discourse there that may be helpful to outline the motivation of the work. Tschumi looked at program through a purposeful hybridity that was design enabled, though one could question if this was event oriented. Allan Kaprow spoke of issues of the happening, which could be explored, which were more chance operations within the environment. The research is worth continuing but will need much closer conceptual attention to the problem beyond quantification.

Complex Morphologies

Program specifications

ALGOrithmic Design type

Office

SoomeenHahm.com, Architecture, Director

group members

Igor Pantic

Review : Michael Hansmeyer

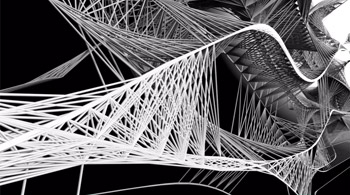

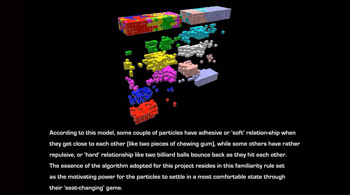

The Complex Morphologies project explores the use of agent-based design systems. It uses these systems to create highly differentiated structures with complex geometries. The project expands on existing agent-based systems by focusing on environmental interaction. It also incorporates a physics library that can address questions of structural stability.

An elaborate simulation environment is set up in Processing, controlled through a central interface with some two dozen parameters. Initial output is directly visible in the program itself, or can be exported as files for various rendering applications.

Complex Morphologies presents a series of tests performed by altering parameters such as attractors and agent flocking behavior. It shows how the system can be adapted to control - among other things - the density of output. The output is presented as elegant diagrams and sophisticated renderings.

It would be interesting to see a proposal of how these agents can be applied in an architectural setting - as this is the project’s stated aim. In doing so, the author(s) may consider exploring additional means of translating agent movement into a structural system or a system of surfaces. Extruding along an agent’s path is only one of many possibilities. For instance, lofting between paths, or placing objects between paths could be options as well.

The author(s) may also experiment more with the agent behavior. For instance, multiple species of agents are conceivable, each with its own attributes and goals. Alternatively, environments that are more malleable and invite more interaction could be possible as well. These types of experiments would help to further the body of research beyond the scripts that the author(s) used, which have for the most part been previously established.

Probabilistic Instrument

Program specifications

ALGOrithmic Design type

Student

ETH Zurich, CAAD

Review : Sean Hanna

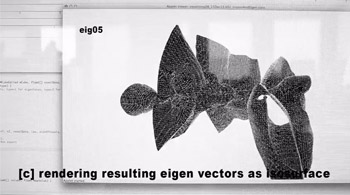

This project creates a continuum of geometric forms based on a set of initial ‘seed’ geometries, by interpolating between them in a high dimensional space of voxel representations. It bases this on the eigenvectors of the ‘seeds’, or principal components, a method that is more typically used for analysis of data, rather than generation of form. A distinguishing feature of this work is that the form does not come so much from the algorithm, but more purely from the data itself. The function of the code, as analysis even more than synthesis, is a reversal of what can be seen in so much of computational work. While in many cases algorithms are used that incorporate data, or use it as a basis for form, the data is used only to drive a model that already embodies the design intent; parametric models, for example, are the design which the data only tunes. Here, a large set of data becomes the space of possible relationships and the final form.
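The general idea of generating form from the principal components of seed geometries can be sketched as follows. This is not the entry's code: it uses only the first principal component, finds it by power iteration (a technique substituted here to keep the example free of external libraries), and works on two made-up four-voxel "forms". The names `first_component` and `generate` and the 0.5 threshold are assumptions.

```python
import math


def first_component(seeds, iterations=200):
    """Return (mean, unit vector) for the first principal component of the seeds.

    Each seed is a flattened voxel grid (a list of 0/1 occupancy values).
    """
    n = len(seeds)
    mean = [sum(column) / n for column in zip(*seeds)]
    centered = [[x - m for x, m in zip(seed, mean)] for seed in seeds]

    # Power iteration on X^T X without building the covariance matrix.
    v = [1.0 + 0.1 * i for i in range(len(mean))]  # arbitrary starting vector
    for _ in range(iterations):
        projections = [sum(a * b for a, b in zip(row, v)) for row in centered]
        w = [sum(p * row[i] for p, row in zip(projections, centered))
             for i in range(len(v))]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:
            break
        v = [x / norm for x in w]
    return mean, v


def generate(mean, component, t, threshold=0.5):
    """New voxel form: move t units from the mean along the component, then threshold."""
    return [1 if m + t * c > threshold else 0 for m, c in zip(mean, component)]


# Two tiny seed forms, each a flattened grid of four voxels.
seeds = [
    [1, 1, 0, 0],
    [0, 0, 1, 1],
]
mean, component = first_component(seeds)

for t in (-1.0, 0.0, 1.0):
    print(t, generate(mean, component, t))
```

Sliding `t` moves continuously through the space spanned by the seeds. The sign of a principal component is arbitrary, so which seed lies at positive `t` depends on the starting vector. With many seeds and several components, the same construction yields the navigable space of forms that the review describes.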

As the authors state, the work creates a space of possibilities, but it also provides a means to navigate them. Despite the high dimensionality involved, this space and the means of navigation turn out to be surprisingly intuitive. The forms themselves also seem to be both familiar in their relationship to the known seed forms, while also novel and surprising. It is this combination of factors that makes the use of such a tool a satisfying experience, and of potential relevance in design.

Are the resulting forms useful as designs? This is difficult to tell. At present the algorithm seems intended for early stage idea generation and inspiration, from which geometry might be extracted for further work. It is conceivable that with a more structured representation of geometry than voxels more rational models of form could be driven in the same way. The method could also potentially incorporate other kinds of data, including non-geometric variables. This project is original in concept and its biggest contribution is likely to be as a ‘seed’ in itself, for the development of these sorts of techniques in the future.

Boot The Bot

Program specifications

ALGOrithmic Design type

Researcher

TU Graz, Institute of Architecture and Media, Assistant Professor

Program has been published

lecture symposium exhibition, website, book magazine, peer reviewed paper

ICCRI 2012

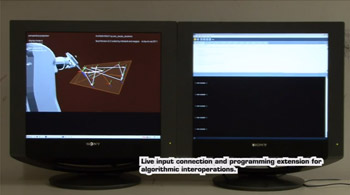

Review : Sean Hanna

This low-level control system for ABB robots is in one of the more challenging areas of design related computation by engaging with the real, physical processes of direct fabrication. It proposes a quite generic and multipurpose toolkit to interface with the machine at a deeper level than many existing software control systems, and is therefore also more focused on the algorithms than the final product. It has at least two main contributions: first, it serves as a platform on which higher level algorithms can be placed, as evidenced by examples of projects ranging from complex timber joinery to painting images; and perhaps more importantly, it proposes a control system that may be better suited than existing proprietary software to the particular requirements of making.

On this latter point, it helps to dispel the illusion that there is such a thing as a general-purpose tool. The software submitted does not rely on standards proposed by the maker of the equipment for its intended purpose, which may not align with the final purpose of the user. As just one example, the platform is open to real time processing, which can allow for live feedback. This is not well accommodated by many proprietary systems, which have as their goal simply to convert finished geometric specifications to a set of tool paths that approximate that geometry. The ability to react, change paths, adapt to unforeseen changes in surroundings, materials, etc., are all things that we take for granted in human craft, but are only just beginning to be explored in industrial robotics. They are most important in industries like architecture, and this sort of system, which allows for bottom-up innovation, is the way to address them.

It can also make an important contribution to education. Standard systems are designed for the purpose of taking a CAD file in and sending real, physical geometry out. This process is generally invisible to the student, furthering the impression that the tool is generic. Making the process open to the new user is important for education now, and for any future innovation in both design and technological development.

Other control systems exist for CNC and robots in general, and specifically for ABB, so although this project has many advantages over its competitors it is not entirely unique in this sense. This also should be seen as an advantage, for as a contribution to a burgeoning movement for better quality understanding and control of fabrication, it has the capacity to make a greater impact on practice, and open source development as proposed here is likely the best way to do this.

EAST & WEST

Program specifications

ALGOrithmic Design type

QA category only

Office

Atelier NORISADA MAEDA, the department of design, architect

Review : Toru Hasegawa